Over the last year, the SEO community has become increasingly focused on AI-powered search platforms such as ChatGPT, Gemini, Perplexity and many others. As usage of these tools grows, concerns about declining reliance on traditional search have pushed many SEOs to go all-in on optimising for AI Search.

We also discussed the impact of AI and how it has evolved SEO in a recent Beyond SEO podcast episode.

From website migrations and data integrity to server-side tracking and the impact of AI on search, this episode shows how Growthack approaches SEO differently and why brands are shifting away from the traditional agency model.

In 2025, we saw a predictable increase in new tactics, frameworks, and checklists promising better visibility in AI-driven answers.

This guide is therefore for anyone who’s been asked:

- Do we need to do AI SEO now?

- Or should we be optimising for ChatGPT?

It aims to cut through the noise and clarify whether anything has ACTUALLY changed when it comes to ranking in AI-powered search environments.

Whether you’re an SEO, marketing leader or business founder, we’ve got an unbiased take for you.

Here are some top-level key takeaways.

| Audience | Takeaways |
|---|---|
| SEOs | Don’t panic. The fundamentals that underpin AI answer generation remain the same core elements: information architecture, content, and links/mentions. |
| Marketing leaders | Human-written content represents 86% of Google results and 82% of AI citations. Prioritise original research, proprietary data, and multi-platform video distribution. These assets deliver value in both traditional and AI-powered search contexts. |
| Founders | 95% of ChatGPT’s 462 million users also actively use Google. Ask one question: Will an AI be able to access + trust + repeat our story? If not, fix crawl access, keep brand facts consistent, and earn legitimate third-party mentions. |

SEO vs GEO vs AEO

The question is: how much of AI SEO is genuinely new? The fundamental SEO techniques, such as keyword strategy, high-quality content, establishing authority, solid technical architecture and clear messaging, remain the same.

Traditional SEO = visibility in standard search results (list of blue links on Google, Bing, Yahoo)

- Example: You search ‘billing software for enterprises’ and see blog posts and comparison pages ranking. Those are what we call keyword-optimised landing pages.

AEO = visibility in answer-style search within traditional search results

- Example: You ask ‘What’s the best billing software for enterprises?’ and get a concise answer box listing 1-10 tools with short explanations. These are structured pieces of content that answer a question directly.

GEO = visibility in generative AI platforms (ChatGPT, Gemini, Claude, Perplexity)

- Example: You ask an AI assistant ‘What billing software should a company use with 500+ employees?’ The AI recommends a tool and explains why. This is where your product is part of the AI’s reasoning and narrative.

Nathan Gotch (SEO Professional) recently pointed out the differences between SEO and AEO, covering tracking, personalisation, the local pack, query fan-out and more. Many found the breakdown helpful and generally agreed with it. Find the full discussion on LinkedIn.

What’s emerging is not simply an evolution of NEW SEO, but a growing separation between traditional search optimisation and the new so-called GEO (Generative Engine Optimisation) and AEO (Answer Engine Optimisation).

The split is also sparking curiosity among audiences, who are exploring it further, as autocomplete suggestions on Google Search and on YouTube show.

Teams are increasingly asking whether they should treat these as distinct channels, how they differ from SEO, and what actions genuinely influence visibility in AI-driven environments.

Wil Reynolds (SEO Professional) highlighted how modern channel growth is breaking the clean measurement model many teams are used to, and how budgets may need to shift to reflect that reality: ‘While companies may currently track 80% of their digital marketing and leave 20% untracked, the ratio might need to shift closer to 60:40 or 50:50, though this doesn’t mean you have to fly completely blind.’ Find the full discussion on LinkedIn.

Similarweb data reveals a nuanced reality: rather than displacing Google, ChatGPT has created a complementary search ecosystem. With 95% of ChatGPT’s 462 million users also actively using Google, we’re witnessing the emergence of dual-mode search behaviour where users strategically toggle between platforms based on task requirements.

According to a recent Graphite.io 2025 report, AI-generated content is increasing across the web, but human-written articles still dominate major search and AI platforms. The study found that human content makes up 86% of Google Search results, and 82% of the articles cited by both ChatGPT and Perplexity, with AI-generated content representing only 14-18% depending on the platform.

Now let’s see the tactics you’ve been waiting for…

1. Use structured data/schema markup

The claim: Structured data helps AI systems interpret content types, relationships, and key facts, which increases the likelihood of accurate extraction, citation, and inclusion in AI-generated answers and summaries.

The reality: There is no black-and-white answer on whether it helps you rank in AI platforms. However, structured data is not new: it has been used for years, mainly to earn rich snippets.

The concept of adding machine-readable meaning to web pages was part of Tim Berners-Lee’s vision for the Semantic Web in 1999. As the internet grew, with millions of users and businesses online, search engines needed a reliable way to extract meaning from the increasingly complex and large body of web pages.

Use of schema markup has been growing steadily for nearly a decade.

Data Source: Web Data Commons | Microdata, RDFa, JSON-LD, and Microformat Data

John Mueller, Search Advocate at Google, has confirmed that structured data won’t make a site rank better. Find the full post on Bluesky.

But does this mean that structured data has no purpose? Here are 2 success stories of using structured data.

Structured data implementation led to +2.7x search traffic and +1.5x session duration.

Google leveraged structured data as early as 2005-2009 through microformats and RDFa. Google announced the extraction of structured data from microformats and RDFa in May 2009. This allows Google to display the content in search results with a richer appearance, known as a rich result. Currently, there are 33+ different types of rich results in which a site can appear.

Here are some examples:

- Article

- Breadcrumb

- Carousel

- Course list

- Dataset

- Discussion forum

- Education Q&A

- Employer aggregate rating

- Event

- FAQ

- Image metadata

- Job posting

- Local business

- Math solver

- Movie

- Organization

- Practice problem

- Product

- Profile page

- Q&A

- Recipe

- Review snippet

- Software app

- Speakable

- Subscription and paywalled content

- Vacation rental

- Video

And Google advocates for the adoption of structured data in an AI search world.

Find the full post on X (Twitter)

Mark Williams-Cook (SEO professional with 22+ years of experience) said LLMs work by ‘tokenising’ content. That means taking common sequences of characters found in text and minting a unique ‘token’ for that set. The LLM then takes billions of sample ‘windows’ of sets of these tokens to build a prediction on what comes next. Of course, LLMs could be using schema in some kind of RAG process. Find the full discussion on LinkedIn

Schema markup helps Microsoft’s LLMs understand the content of web pages, a fact confirmed by Fabrice Canel, Principal Product Manager at Microsoft Bing. And ChatGPT leverages the Bing search index. Let that sink in. Find the full discussion on LinkedIn.
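To make "machine-readable meaning" concrete, here is a minimal sketch that builds a schema.org Organization object in Python and wraps it in the script tag used for JSON-LD. The company name, URL, and profile link are placeholders invented for illustration, and the helper name `to_json_ld_script` is our own.

```python
import json

# Hypothetical organisation details; replace with your own brand facts.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Billing Co",
    "url": "https://www.example.com",
    "sameAs": [
        "https://www.linkedin.com/company/example-billing-co",
    ],
}


def to_json_ld_script(data: dict) -> str:
    """Serialise a schema.org dict into an embeddable JSON-LD script tag."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(to_json_ld_script(organization))
```

The output would sit in the page's HTML. Whether a given search or AI system reads it is up to that system, so treat this as a way to state facts unambiguously rather than a ranking lever.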

2. Break content into small, machine-extractable chunks

The claim: Short paragraphs, lists, FAQs, and clearly separated sections make content easier for AI systems to scan, extract, and reuse as discrete answers in generated responses.

The reality: This is true but not new. This method also benefits us humans.

Also, usability research showed people scan web content and that scannable writing performs better.

- 79% of users scan pages rather than reading word-by-word.

- A study comparing five writing styles showed significant usability improvements: the site scored 58% higher with concise content, 47% higher with scannable text, and 27% higher when an objective style was used over a promotional one.

Eyetracking research reveals four primary webpage text scanning patterns: F-pattern, spotted, layer-cake, and commitment.

When AI products provide grounded answers with citations, they often rely on retrieval-augmented generation (RAG). OpenAI’s documentation for GPT knowledge retrieval describes a RAG pipeline in which uploaded files are chunked, embedded, stored in a vector database, and semantically retrieved at query time.

Image source: Retrieval Augmented Generation (RAG) and Semantic Search for GPTs

And this isn’t just OpenAI. Microsoft’s Copilot ecosystem similarly grounds generative answers by retrieving relevant information from connected enterprise and web-based knowledge sources. In Copilot Studio, generative answers use retrieval-augmented techniques and semantic search to pull precise context from those sources at runtime. As with other RAG-based systems, using smaller, well-scoped content blocks improves retrieval precision, supports clearer citations, and reduces the risk of topic misattribution.

The Chroma research shows that content chunking is not a stylistic preference but a retrieval-level variable: how content is segmented directly affects which tokens are retrieved and therefore which facts an AI system can reuse in grounded answers.

Image source: Evaluating Chunking Strategies for Retrieval
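As a rough sketch of why segmentation matters at retrieval time, the example below splits a page into paragraph-level chunks and picks the chunk that best matches a query. It uses simple word overlap as a stand-in for the vector embeddings real RAG systems use, and the sample page text and function names are made up for illustration.

```python
import re


def chunk_by_paragraph(text: str) -> list[str]:
    """Split text into chunks at blank lines, one chunk per paragraph."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def tokens(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_chunk(chunks: list[str], query: str) -> str:
    """Return the chunk with the highest Jaccard overlap with the query."""
    q = tokens(query)

    def score(chunk: str) -> float:
        c = tokens(chunk)
        return len(q & c) / len(q | c) if q | c else 0.0

    return max(chunks, key=score)


# Hypothetical page copy with one topic per paragraph.
page = """Our billing software supports invoicing in 40 currencies.

Pricing starts at a flat monthly fee with no setup cost.

The company was founded in 2012 and has offices in three countries."""

if __name__ == "__main__":
    chunks = chunk_by_paragraph(page)
    print(best_chunk(chunks, "how much does the pricing cost per month"))
```

Because each paragraph covers one topic, the pricing question retrieves only the pricing paragraph. If all three facts were fused into one block, the retrieved chunk would carry unrelated details along with the answer.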

Nikki Pilkington (SEO Consultant) brilliantly debunks the ‘chunking’ myth, revealing it as repackaged SEO fundamentals she’s taught since 2009. She’s right: SEOs can’t control actual chunk optimisation as it’s AI engineering. The solution? Focus on what’s always worked: clear, focused, self-contained sections. Find the full discussion on LinkedIn

3. Use clear semantic heading hierarchies

The claim: Logical H1 → H2 → H3 structures help AI understand topical hierarchy, summarise sections accurately, and identify which parts of a page answer specific questions.

The reality: This traditional set of best practices has been employed for decades by SEOs and developers. While the claim is true, unfortunately it offers nothing new.

A foundational paper on using HTML structure in IR found that terms in structural elements like titles and headings carry additional semantic weight for retrieval ranking and relevance compared with plain body text. Specifically, the research grouped HTML tags (Title, H1 → H2, H3 → H6) into distinct classes for indexing and demonstrated that this structural information improved retrieval performance compared to ignoring such structure.

As AI agents increasingly parse, extract, and summarise pages non-visually, the same structural clarity reduces ambiguity, improves extractability, and makes content more resilient across systems. This doesn’t guarantee inclusion or ranking but it creates a foundation machines can use instead of guesswork.

Some common semantic elements include the following:

- Headings <h1> → <h2> → <h3>

- Paragraphs <p>

- Tabular data <table>

- Links or anchors (<a>)

- Images (<img>)

- Sections (<section>)

- Asides (<aside>)

Jono Alderson (Technical SEO Consultant) published an excellent piece on the importance of semantic HTML. Semantic HTML matters because it encodes intent, hierarchy, and boundaries in a way machines can rely on without inference.
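One way to audit a heading hierarchy is to extract the outline and flag places where a level is skipped. The sketch below does this with Python's standard-library HTML parser. The class and function names are our own, and "a heading may go at most one level deeper than the previous one" is a common convention we assume here, not a rule taken from any search engine's documentation.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def skipped_levels(headings):
    """Return headings that sit more than one level deeper than the previous one."""
    problems = []
    previous = 0
    for level, text in headings:
        if level > previous + 1:
            problems.append((level, text))
        previous = level
    return problems


if __name__ == "__main__":
    parser = HeadingOutline()
    parser.feed("<h1>Billing software</h1><h3>Pricing</h3><h2>Features</h2>")
    print(parser.headings)
    print(skipped_levels(parser.headings))
```

In the sample markup, the h3 directly under the h1 is flagged, while moving back up from h3 to h2 is treated as fine.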

4. Allow AI crawlers to access your content

The claim: Content that AI crawlers cannot access or retrieve cannot be surfaced, referenced, or cited in AI search results or generated answers.

The reality: This is true, but it is also a matter of common sense: an AI system cannot cite content that its crawler is not permitted to fetch.

By the end of 2024, 67% of the top news websites had already blocked AI chatbots, forcing those chatbots to rely on lower-quality sources. The trend is accelerating: 79% of top news sites now block AI training bots via their robots.txt file as we approach the end of 2025.

In 2025, Cloudflare launched a ‘pay-per-crawl’ model, allowing content owners to charge AI crawlers for access. The initiative offers publishers several benefits:

- Set domain-wide pricing with per-crawler control

- Monetise AI access without needing leverage or relationships

- New revenue stream from AI training and inference

AI-bot traffic shows that AI search and answer systems rely on direct crawler access, meaning content that is blocked from these bots cannot be retrieved, grounded, or cited in AI-generated results.

Data Source: Cloudflare
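A quick way to see what your robots.txt tells a given crawler is to test it with Python's standard-library `urllib.robotparser`. The robots.txt content and URL below are hypothetical, and the user-agent tokens are examples only; check each vendor's documentation for the tokens its crawlers currently use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks one AI crawler site-wide, allows everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed_agents(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Report, per user agent, whether robots.txt permits fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["GPTBot", "PerplexityBot", "Googlebot"]
    print(allowed_agents(ROBOTS_TXT, agents, "https://www.example.com/pricing"))
```

Note that robots.txt only expresses a request. It says nothing about firewall or CDN rules, which can block a crawler even when robots.txt allows it.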

5. Optimise pages for fast and clean rendering

The claim: AI systems often rely on static HTML and limited rendering. That makes clean markup, fast server responses, and minimal JavaScript critical for reliable content extraction.

The reality: This is largely true as AI crawlers typically discover and index content from the initial HTML served by the server.

While search engines like Google have advanced JavaScript rendering capabilities, many AI crawlers currently do not execute JavaScript, likely due to the computational cost and complexity of browser-based rendering at scale. This means content added only via client-side rendering may be invisible to them. Server-side rendering or pre-rendering ensures key content appears in the HTML the crawler fetches, improving crawlability and increasing the likelihood that AI systems can extract and reference that content.
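To check whether key copy survives without JavaScript, you can look for it in the HTML exactly as served. The sketch below extracts text from static HTML while ignoring script and style bodies, which approximates what a non-rendering crawler could read. Both HTML samples and the helper names are invented for illustration, and real crawlers may behave differently.

```python
from html.parser import HTMLParser


class StaticText(HTMLParser):
    """Collect text present in the served HTML, ignoring script and style bodies."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def visible_without_js(html: str, phrase: str) -> bool:
    """True if the phrase appears in the text of the HTML as served."""
    parser = StaticText()
    parser.feed(html)
    return phrase.lower() in " ".join(parser.parts).lower()


# Hypothetical server-rendered page: the copy is in the initial HTML.
SERVER_RENDERED = "<main><h1>Pricing</h1><p>Plans start at a flat monthly fee.</p></main>"

# Hypothetical client-rendered page: the copy only exists after JavaScript runs.
CLIENT_RENDERED = (
    '<div id="root"></div>'
    "<script>document.getElementById('root').textContent = "
    "'Plans start at a flat monthly fee.';</script>"
)

if __name__ == "__main__":
    print(visible_without_js(SERVER_RENDERED, "flat monthly fee"))
    print(visible_without_js(CLIENT_RENDERED, "flat monthly fee"))
```

In practice you would feed in the response body fetched from your own URL instead of the sample strings.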

You can see how Google handles JavaScript web apps in three major phases: crawling, rendering, and indexing.

Image Source: Understand JavaScript SEO | Google Search Central

6. Answer long-tail conversational queries

The claim: AI search favours natural-language, question-based queries. This means content that directly answers conversational prompts is more likely to be selected for generated responses.

The reality: If you’ve been involved in SEO for long enough, you’ll know that targeting keywords with low competition is a long-standing and effective practice.

This approach led to the discovery of long-tail terms. These terms are not new and have been utilised in various ways for a long time, such as in FAQs on landing pages, for writing blog posts, and for creating highly specific landing pages.

Research in AI search and retrieval increasingly treats natural language and conversational inputs as primary signals of user intent rather than relying solely on keyword matching. Work on conversational search and neural information retrieval shows that richer, long-tail queries can provide valuable contextual signals that improve retrieval quality when properly modelled. As a result, content that directly addresses these contextualised information needs is more likely to be retrieved, surfaced, and used in downstream answer generation.

Eli Schwartz (SEO & Growth Advisor) nails why ‘long-tail keywords’ deserves retirement. The term diminishes what should be every brand’s primary focus. In AI search especially, vague broad terms mean nothing while specific queries signal ready-to-convert users delivering real ROI. Find the full discussion on LinkedIn

7. Lead pages with summaries or TL;DRs

The claim: Opening summaries help AI quickly identify primary topics and key conclusions, which increases the chance that content is quoted or summarised in AI answers.

The reality: Given the rapid pace of digital content consumption, the need for concise content has grown due to shorter attention spans.

Data Source: Microsoft Attention Spans Research Report

Originally appearing on forums like Reddit, the acronym TL;DR (Too Long; Didn’t Read) was used by users to provide a brief summary of a lengthy post. Today, TL;DR serves to give a quick overview of the main ideas for those who lack the time or patience to read the entire text.

A user on Reddit shared the results of a simple UX improvement: adding TL;DR summaries to articles and product pages increased conversions by 33%. The two-week test suggested that giving visitors instant access to key information, without forcing them to scroll, pays off.

Image Source: How Adding TL;DR Boosted My Conversions by 33%

Research in Information Retrieval demonstrates that abstract-like and query-biased summaries significantly improve both the speed and accuracy of relevance judgments. These findings provide a strong theoretical and empirical foundation for leading with summaries or TL;DRs in content meant to be read by AI systems.

LLMs are strongest at the edges of context. Research shows that even modern long-context models struggle to reliably retrieve and use information placed in the middle of large inputs, while performing much better when key details appear at the beginning or end. This U-shaped performance pattern is known as the “lost in the middle” effect.

Image source: How Language Models Use Long Contexts

Michael King (Marketing Technologist) highlighted the ‘Why Language Models Hallucinate’ paper from the OpenAI team and summarised it: ‘The TL;DR is models hallucinate because training and evaluation reward guessing, not admitting uncertainty. Even with perfect data, statistical pressures and binary scoring drive models to bluff.’ Find the full discussion on LinkedIn.

8. Build strong internal linking structures

The claim: Internal links clarify topical relationships, improve crawlability, and help AI systems understand how related content fits together within a subject area.

The reality: The logic mirrors how SEOs have used internal links to reinforce relevance and relationships between pages long before AI search became mainstream.

Daniel Foley Carter (SEO Specialist with 25+ years of experience) said that internal links are CRUCIAL for good SEO, yet there’s a lot more emphasis on external links. Find the full discussion on LinkedIn.

Google has long confirmed the importance of internal linking. Google’s own documentation has consistently emphasised internal linking as essential for:

- Crawling and discovery

- Understanding page relationships

- Establishing topical importance

- Links act as signals of importance

- Anchor text provides semantic context

- Internal links distribute relevance across a site

Image Source: Link best practices

9. Build high-quality backlinks and mentions

The claim: AI systems are more likely to reference a source in their generated answers when that source has authoritative backlinks and credible mentions, which act as signals of its trust and relevance.

The reality: Major advances in web search during the late 1990s leveraged the hyperlink graph as a key signal. For instance, Google’s original system explicitly ‘makes heavy use of the structure present in hypertext.’

Similarly, Kleinberg’s HITS algorithm formalised the concepts of ‘authoritative sources’ and ‘hub pages’ based on link structure.

Furthermore, link structure was utilised for trust and anti-spam purposes, as seen in TrustRank, which propagated ‘reputable’ signals from known good pages.

That’s the technical lineage behind ‘authoritative backlinks’ as a trust/authority signal.
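The link-graph idea behind these systems can be sketched with a simplified PageRank-style power iteration. This is a textbook illustration under assumed settings (a damping factor of 0.85 and a toy graph with made-up page names), not a description of how any current search or AI system scores links.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to.
    Every link target must also appear as a key.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page receives a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its damped rank evenly among its outlinks.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page graph: three pages link to "guide".
toy_graph = {
    "home": ["guide"],
    "blog": ["guide"],
    "guide": ["home"],
    "press": ["guide"],
}

if __name__ == "__main__":
    for page, score in sorted(pagerank(toy_graph).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.3f}")
```

The page with the most inbound links ends up with the highest score, and the page it links to inherits part of that score, which is the intuition behind treating links as votes.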

Jono Alderson (SEO Consultant) shared a fantastic article, ‘There’s no such thing as a backlink’. LLMs and AI agents don’t think in backlinks at all. They parse the web as a network of entities, concepts, and connections. They care about how trust, authority, and relevance propagate along the edges.

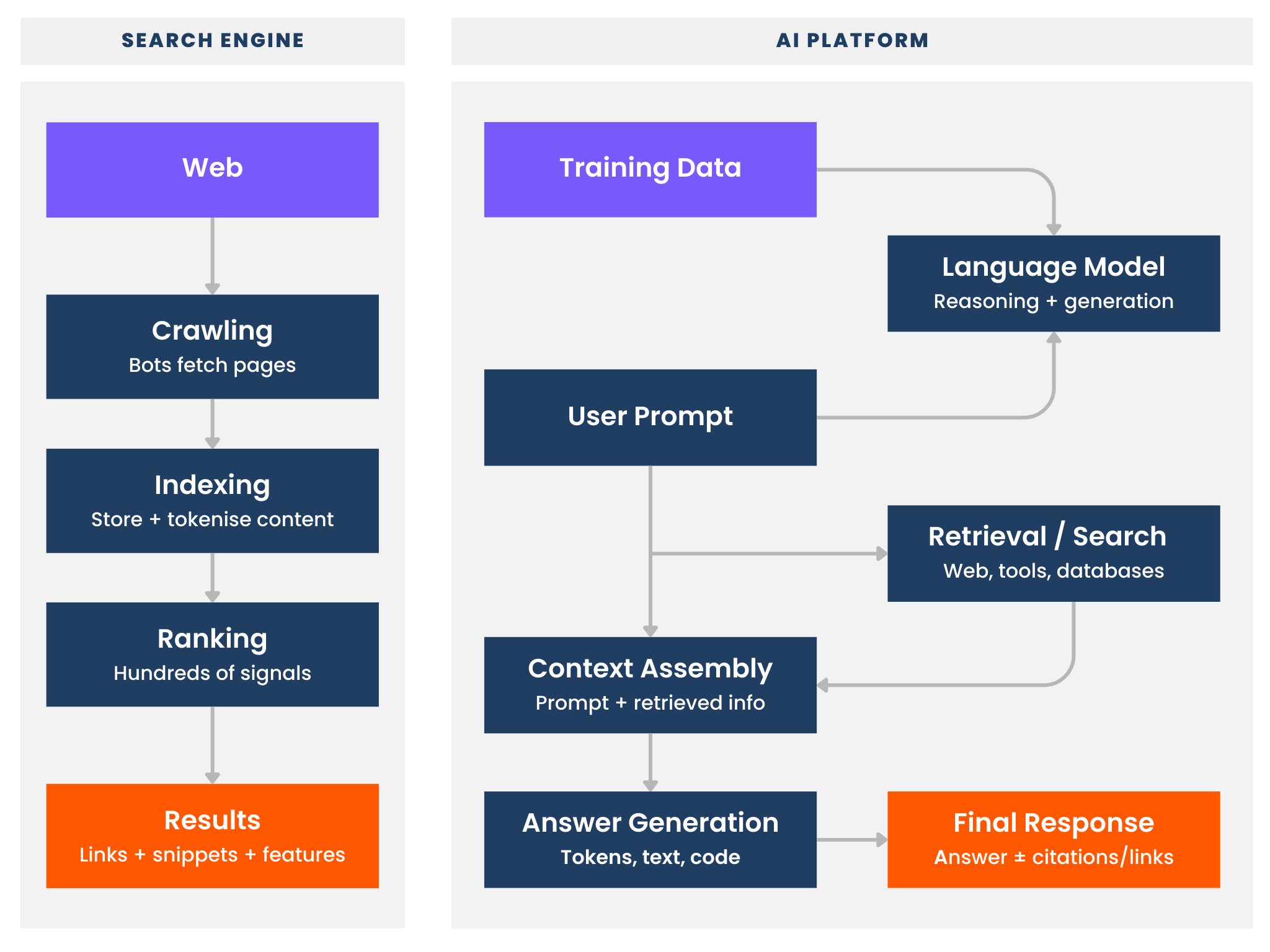

Most AI search experiences that cite sources work like this:

- They retrieve candidates from an index (often search-index-like, sometimes enterprise search).

- They rank/rerank candidates.

- They generate an answer using the retrieved sources (and often cite them).

Backlinks/mentions matter mainly at the first two steps (retrieval and ranking). If the retrieval/ranking system treats links/mentions as signals of quality/authority, your page is more likely to be ranked high enough to be retrieved. Google says that links or references from prominent sites are a quality factor.

Patrick Stox (Technical SEO) tweeted what he heard at a search conference in 2024: that Google needs very few links.

Gary Illyes, Analyst at Google, later replied: ‘I shouldn’t have said that… I definitely shouldn’t have said that.’

Here are 3 success stories of how links helped before this AI apocalypse.

- +243% growth in organic traffic with link-building campaigns

- +193% increase in organic traffic with link-building

- +275% growth in organic traffic with link-building

10. Maintain consistent brand messaging across the web

The claim: Consistent brand facts, descriptions, and positioning reduce ambiguity and help AI systems form stable, accurate representations of an entity.

The reality: Yes, and it absolutely is important. Nine times out of ten, you control what you say on your own website. Make sure the messaging across key pages (homepage, about, products, contact) is consistent.

What is often characterised as AI-level understanding of entities is, in practice, a problem of large-scale data integration. Truth discovery research addresses the challenge of resolving inconsistencies across multiple information sources.

Visualisation credit: Security and Privacy Academy | Record Linkage Explained

Record linkage (entity resolution) exists because real-world records are noisy. The same entity can appear with inconsistent or missing attributes, and different entities can look similar. Linkage accuracy improves when records contain accurate, distinctive identifiers, because agreements on strong/rare attributes provide higher evidence for a match.

These systems infer likely truths by jointly estimating source reliability and the degree of agreement among sources. When factual claims about an entity are consistently presented across independent, reputable sources, they generate stronger agreement signals, which increases the likelihood that those claims are inferred and selected as true.

Research by AirOps showed that when users turn to AI search for commercial discovery, the majority of brand mentions are attributed to third-party sources, which outnumber brand-owned citations by more than six to one. Brands that build a strong owned-content foundation and earn external recognition increase their visibility in AI search.

11. Build entity relationships using entity-rich language

The claim: Naming and describing entities (people, brands, products, concepts) helps AI map content into knowledge graphs, improving retrieval accuracy.

The reality: Okay, at this point, we are overcomplicating SEO.

You have probably done entity SEO without meaning to, because people naturally explain things the way humans understand them, not like SEO robots.

For example, someone might write: “Nike is a sportswear brand founded by Phil Knight. The company is known for athletic shoes and apparel.”

That person wasn’t doing SEO. They were just being clear and specific. But to a search engine, that sentence:

- Identifies Nike (company)

- Connects it to Phil Knight (person)

- Links it to sportswear, shoes, apparel (related concepts)

That’s entity SEO.

So when relationships between entities are stated explicitly, they can be represented directly as RDF statements (subject-predicate-object triples) in an RDF graph. This makes those links machine-interpretable for consistent querying (e.g., SPARQL graph patterns) and, where an entailment regime is used, for formal inference over the stated facts. That in turn can improve how systems connect related concepts in generated outputs.
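As a minimal sketch, the Nike sentence above can be written as subject-predicate-object triples and queried with a simple pattern match, loosely in the spirit of a SPARQL graph pattern. The predicate names (`is_a`, `founded_by`, `known_for`) are illustrative labels we made up, not terms from a real vocabulary, and plain Python tuples stand in for a proper RDF store.

```python
# Each fact is a (subject, predicate, object) triple, mirroring an RDF statement.
# The predicate names are illustrative, not taken from a real vocabulary.
TRIPLES = [
    ("Nike", "is_a", "sportswear brand"),
    ("Nike", "founded_by", "Phil Knight"),
    ("Nike", "known_for", "athletic shoes"),
    ("Nike", "known_for", "apparel"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    return [
        (s, p, o)
        for s, p, o in triples
        if subject in (None, s) and predicate in (None, p) and obj in (None, o)
    ]


if __name__ == "__main__":
    # "What is Nike known for?"
    print(match(TRIPLES, subject="Nike", predicate="known_for"))
    # "Which facts mention Phil Knight?"
    print(match(TRIPLES, obj="Phil Knight"))
```

The point is that a clearly written sentence already contains these triples. Nothing extra has to be bolted on for a system to extract them.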

In Microsoft’s Bing Q&A architecture, answers are produced by combining structured knowledge from a knowledge graph (e.g., Satori) with information extracted from web documents/web free text (and other feeds), supported by query understanding and document understanding.

Image Source: Question Answering at Bing Presentation

Dan Hinckley (Chief Product & AI Officer) shared a tip: analysing AI Overviews with natural language processing tools can reveal which entities (people, organisations, and concepts) search systems prioritise for specific topics. Find the full discussion on LinkedIn.

12. Secure listings on authoritative directories

The claim: Authoritative directories and reference sites provide structured, trusted data that AI systems frequently use when assembling factual answers.

The reality: Despite ongoing changes in search, business directories are still critical for local SEO.

- 82% of consumers trust online directories when evaluating a new business.

- Business directories represent 31% of local-intent organic search results.

- 33% increase in monthly website traffic in Q1 2025 for businesses that are listed on top directories.

Unlike traditional search, where results are ranked by relevance and links, AI-powered search (such as Google’s Search Generative Experience, Bing Chat, ChatGPT with browsing, and engines like Perplexity) works by aggregating and synthesising information from multiple sources to answer a query.

These systems don’t rank webpages for the user. Instead, they select and compile facts. As a result, the sources that an AI pulls from become extremely important for whether and how your business or website is mentioned in the answer. Directory listings come into play as pre-vetted sources of structured information that AI models trust. Research by BrightLocal found that ‘all LLMs are using directories and citations for business information across every industry.’

Ann Smarty (SEO Specialist) explains that it is important to know which brands your business is often listed with, because that defines your brand’s relevance and visibility. It is not just about backlinks anymore. Co-citations can also refer to unlinked brand mentions, which are also used by Google and AI algorithms. Find the full post: Co-Citation & Co-Occurrence in AI-Driven SEO

13. Publish original research and first-party data

The claim: Original studies, proprietary data, and unique statistics increase credibility and are more likely to be cited than duplicated or generic content.

The reality: This isn’t an AI SEO trick. It’s how knowledge has always worked.

Long before search engines, researchers, journalists, and academics operated by a simple principle: go to the original source. If you wanted to write about economic trends, you cited the economist who ran the numbers. If you were discussing a historical event, you referenced primary documents or the historian who uncovered new evidence.

This was never about gaming algorithms. It was about intellectual integrity and practical necessity.

Lily Ray (VP of SEO Strategy and Research) highlighted an interesting example: ebsco.com is rapidly growing in SEO and AI Search visibility, primarily from their /research-starters/ subfolder launched earlier this year. Their Research Starters section functions like an encyclopedia, offering definitions curated from highly trustworthy, authoritative sources. Every article includes a bibliography referencing the authoritative sources used. Find the full discussion on LinkedIn

Original research and proprietary data are rare by definition. When you’re the only source for a particular statistic or finding, others must cite you if they want to reference that information. There’s no alternative. You’ve created something that didn’t exist before.

Generic content, on the other hand, exists in countless variations. There’s no compelling reason to cite any particular version.

SEO just made this visible

What changed with search engines is that this age-old dynamic became quantifiable and scalable. Links became the digital equivalent of academic citations. Google’s algorithm essentially automated what librarians, editors, and researchers had always done: recognise that original sources carry more authority than derivative summaries.

The websites that win in search aren’t gaming the system. They’re following the same principles that made certain books essential references in libraries, certain journals authoritative in academia, and certain experts quotable in journalism. They’re creating something worth referencing rather than rehashing what already exists.

14. Distribute content on AI-crawled platforms

The claim: AI systems frequently reference external platforms such as forums, social networks, and video sites, making off-site distribution critical for visibility.

The reality: Since OpenAI began partnering with platforms like Reddit, we’ve observed concerning trends. SEOs quickly realised these platforms were being used as training sources, which led to manipulative practices that have degraded content quality across these communities.

Data Source: Semrush | Google AI Mode vs. Traditional Search & Other LLMs [Study]

We do not recommend running aggressive black hat SEO campaigns on forum platforms for AI visibility. Even with content on these platforms, there’s no guarantee of AI visibility.

Access ≠ selection.

AI systems use complex ranking, retrieval algorithms, and content policies to determine what surfaces in responses.

There are definitely ways you can approach this strategically. As Ethan Smith, CEO of Graphite, said in an interview with Lenny Rachitsky: ‘find a thread that’s part of an LLM citation you want to show up in. Say who you are and where you work. Give a useful answer.’ Find the full video.

15. Optimise video content for AI citation

The claim: Well-structured video content with metadata, captions, and context is more likely to be referenced, summarised, or cited in AI-generated answers.

The reality: Video content is becoming critical for search visibility.

Lower quality video > Low quality written content

Google’s partnership with Instagram now allows search engines to display public posts directly in results pages, making video distribution across multiple platforms essential for discoverability.

While YouTube optimisation has been a long-standing SEO practice with established tactics, the AI search era hasn’t fundamentally changed YouTube SEO itself.

According to Wyzowl’s video marketing statistics:

- YouTube dominates with 82% of businesses using it for video distribution

- LinkedIn follows at 70%

- Instagram at 69%

- Facebook at 66%

- Webinars at 56%

- TikTok at 40%

Brands should prioritise video creation across multiple platforms. The multi-platform approach ensures visibility across traditional search, AI-powered answers, and social discovery algorithms, all of which increasingly favour video content that demonstrates genuine value and engagement.

And we have taken our own advice with the Beyond SEO Podcast.

Conclusion

The bottom line? There is no secret AI SEO playbook. The tactics being marketed as GEO or AEO innovations are foundational SEO practices that have existed for years.

Search behaviour is evolving, but the fundamentals remain unchanged. For now, the one real exception is tracking. If you’re already doing SEO well (going beyond Google’s blue links), you may already be prepared for AI search.

About Growthack

Growthack specialises in building SEO systems that drive revenue by combining technical, content, and data-driven insights. No hype. No handovers. Award-winning SEO that helps your brand stay discoverable, trusted, and ready for what’s next.
